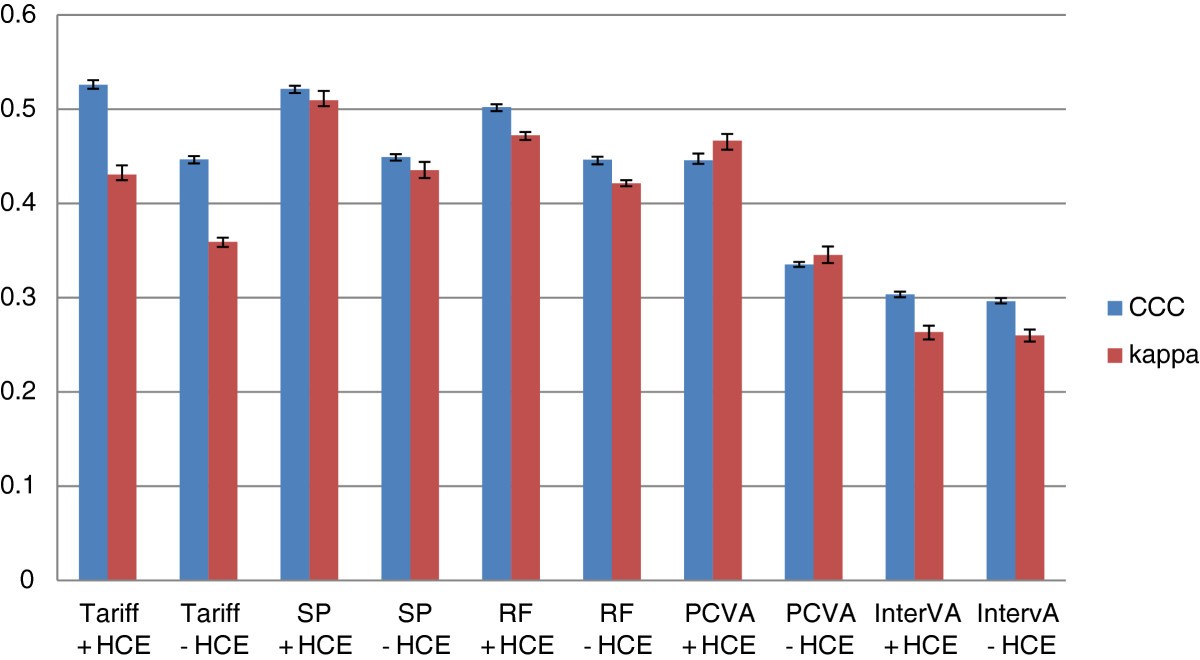

Using verbal autopsy to measure causes of death: the comparative performance of existing methods | BMC Medicine | Full Text

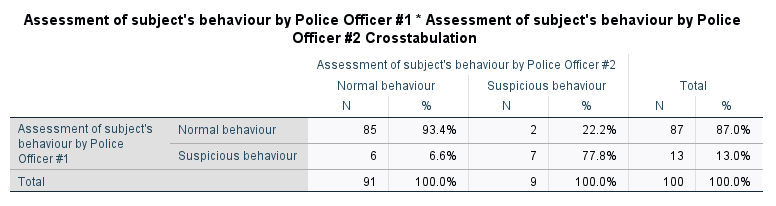

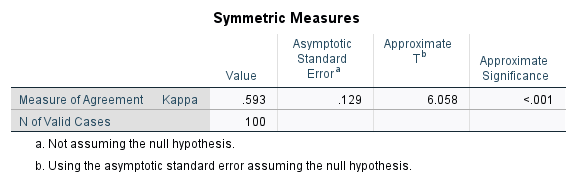

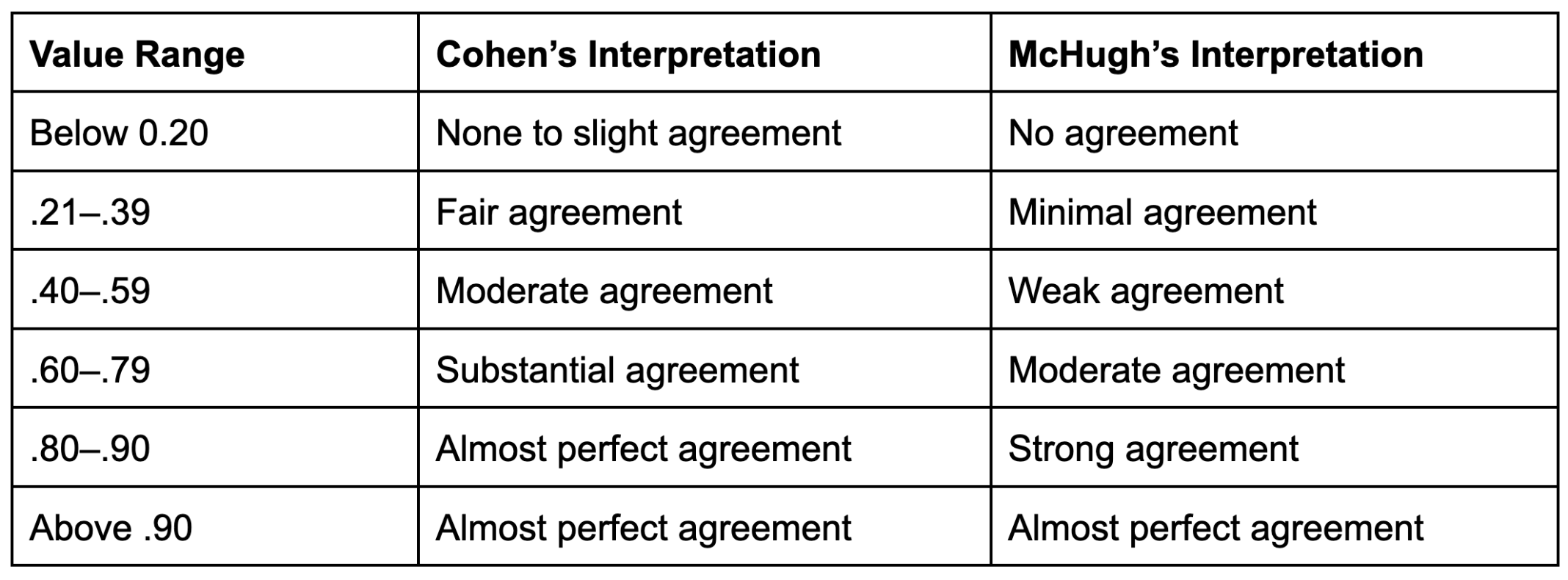

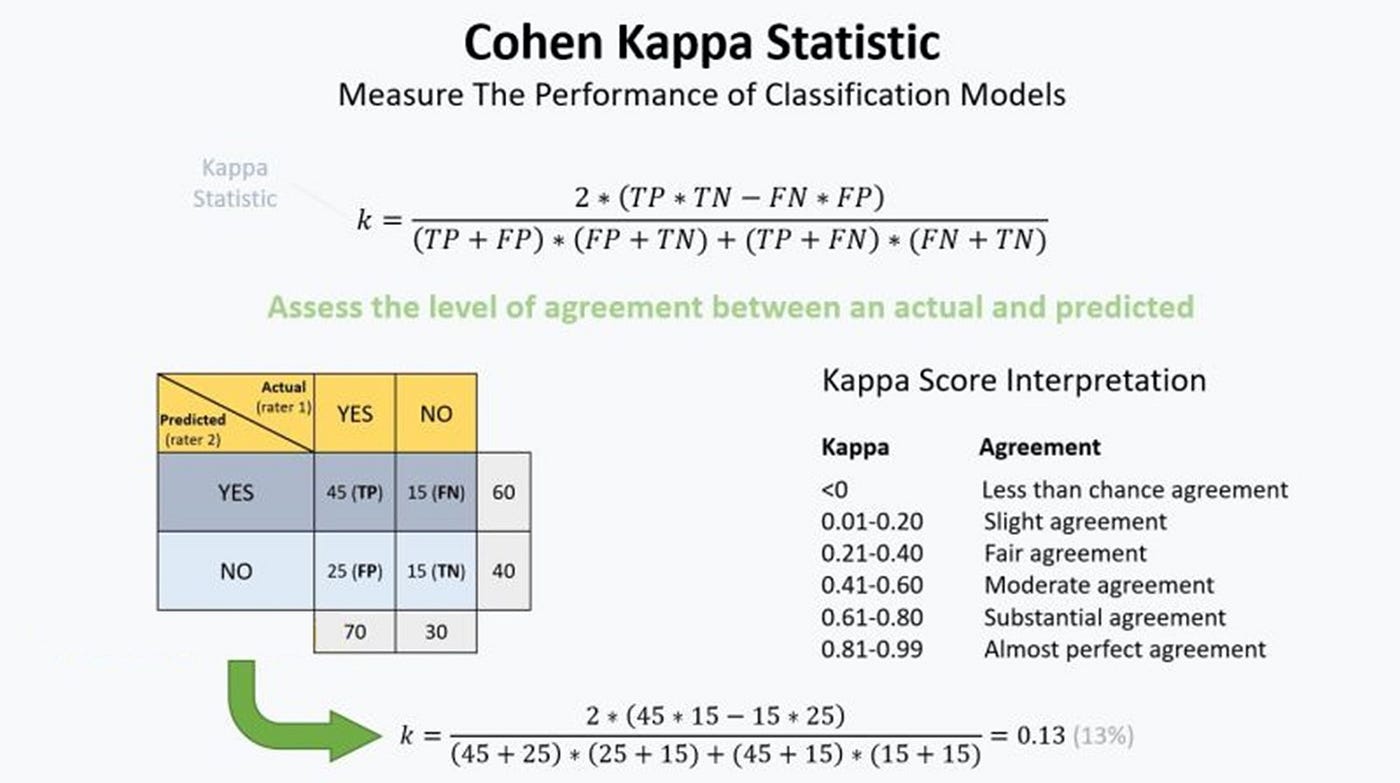

Cohen's kappa in SPSS Statistics - Procedure, output and interpretation of the output using a relevant example | Laerd Statistics

The Equivalence of Weighted Kappa and the Intraclass Correlation Coefficient as Measures of Reliability - Joseph L. Fleiss, Jacob Cohen, 1973

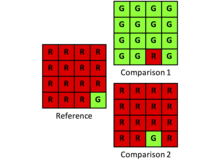

Concordance of actions between the modalities assessed by Cohen kappa... | Download Scientific Diagram

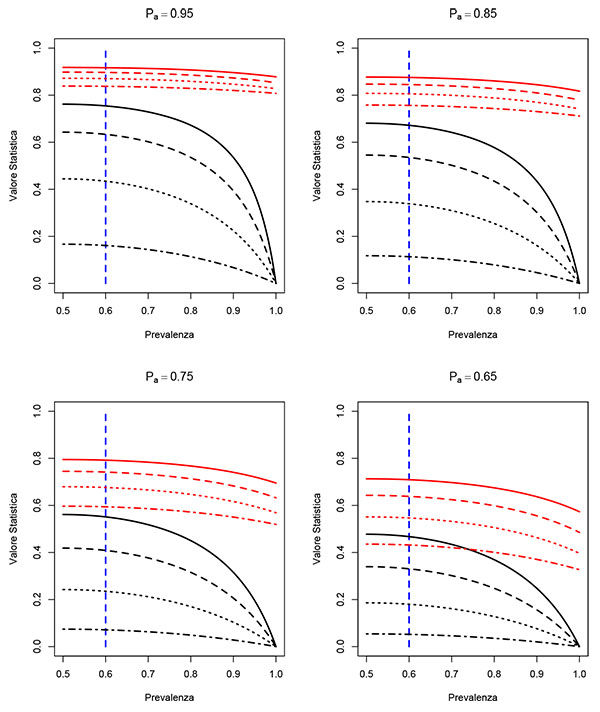

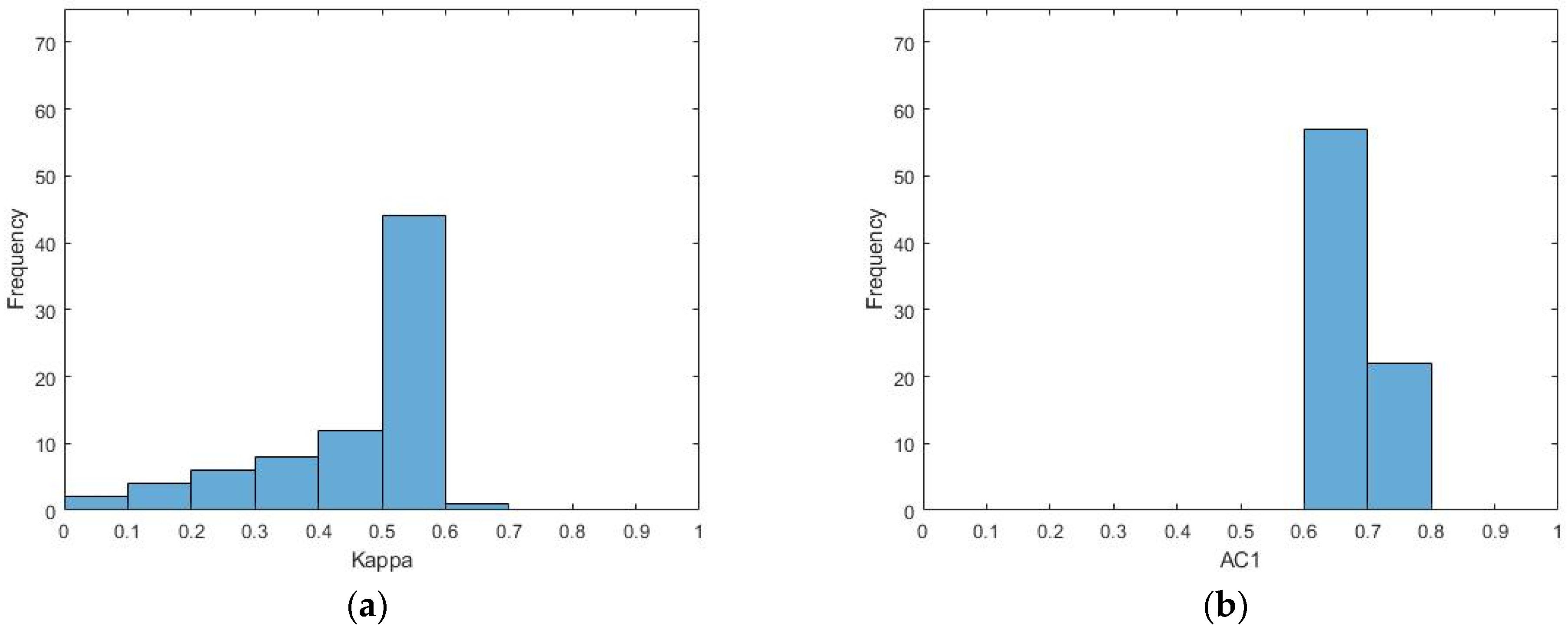

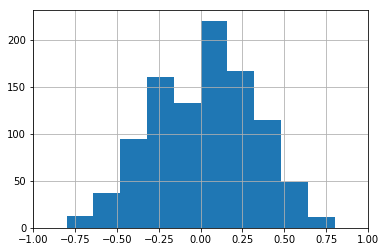

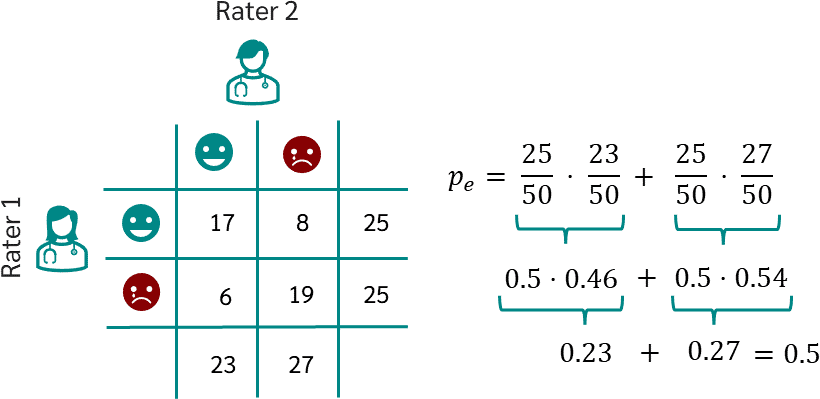

Symmetry | Free Full-Text | An Empirical Comparative Assessment of Inter-Rater Agreement of Binary Outcomes and Multiple Raters

![PDF: Sample-size calculations for Cohen's kappa | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/c59f58e51e97eaa055b450d9f71cac402d7e45ad/3-Table1-1.png)